Agent Security and Prompt Injection: How to Safely Integrate AI Tools

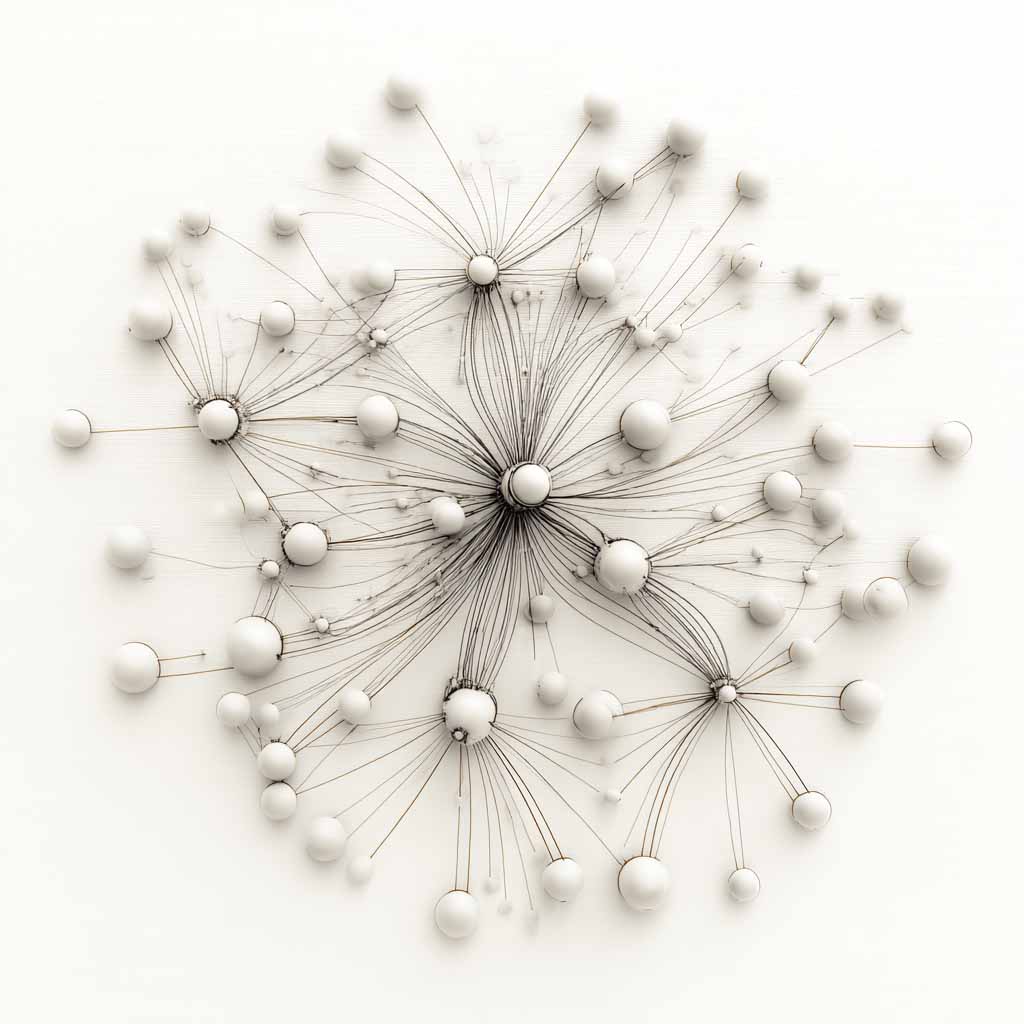

🛡️ Agent Security and Prompt Injection

The ability of Large Language Models (LLMs) to control applications via tool calls (functions) is revolutionary. However, it also introduces serious security risks, primarily from prompt injection. Prompt injection occurs when a user or an outside data source (like a LinkedIn profile’s “About” section) injects malicious...